Organizations and Markets in Emerging Economies ISSN 2029-4581 eISSN 2345-0037

2023, vol. 14, no. 1(27), pp. 220–241 DOI: https://doi.org/10.15388/omee.2023.14.89

Should Your Chatbot Joke? Driving Conversion Through the Humour of a Chatbot Greeting

Vaida Lekaviciute

ISM University of Management and Economics, Lithuania

https://orcid.org/0000-0003-0341-1684

vaida.lekaviciute@gmail.com

Vilte Auruskeviciene (corresponding author)

ISM University of Management and Economics, Lithuania

https://orcid.org/0000-0002-1563-4052

vilaur@ism.lt

James Reardon

University of Northern Colorado, Monfort College of Business, USA

https://orcid.org/0000-0003-2386-308X

james.reardon@unco.edu

Abstract. Despite the increasing number of companies employing chatbots for tasks that previously needed human involvement, researchers and managers are only now beginning to examine chatbots in customer-brand relationship-building efforts. Not much is known, however, about how managers could modify their chatbot greeting, especially incorporating humour, to increase engagement and foster positive customer–brand interactions. The research aims to investigate how humour in a chatbot welcome message influences customers’ emotional attachment and conversion-to-lead through the mediating role of engagement. The findings of the experiment indicate that conversion-to-lead and emotional attachment rise when chatbots begin with a humorous (vs neutral) greeting. Engagement mediates this effect such that a humorous (vs neutral) greeting sparks engagement and thus makes users more emotionally attached and willing to give out their contact information to the brand.

The study contributes to the existing research on chatbots, combining and expanding previous research on human–computer interaction and, more specifically, human–chatbot interaction, as well as the usage of humour in conversational marketing contexts. This study provides managers with insight into how chatbot greetings can engage consumers and convert them into leads.

Keywords: chatbot, humour, conversational agent, engagement, conversion-to-lead, emotional attachment

Received: 16/12/2022. Accepted: 2/5/2023

Copyright © 2023 Vaida Lekaviciute, Vilte Auruskeviciene, James Reardon. Published by Vilnius University Press. This is an Open Access article distributed under the terms of the Creative Commons Attribution Licence, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Introduction

Also referred to as conversational agents, chatbots are software applications distinguished from other software by their capacity to communicate with users in natural language, resembling a conversation with a real person. Chatbots are progressively displacing human service workers on websites, social media platforms, and messaging apps, and are used across several end-user industries, from health and wellness to finance and banking (Yuen, 2022). The chatbot market, valued at $3.78 billion in 2021, is projected to grow at a CAGR of 30.29% through 2027 (Mordor Intelligence, 2022).

Continuous advancements in Artificial Intelligence (AI) and natural language processing (NLP), together with research fields such as human–computer interaction (HCI), are enabling the incorporation of diverse social characteristics into chatbots, increasing their appeal to customers. Attributing human characteristics to inanimate objects, a process called anthropomorphism (Van Pinxteren et al., 2020), has been shown to positively affect consumer behaviour, for example by increasing consumers’ trust in products or services (e.g., Waytz et al., 2014). However, the most distinctly human capabilities, such as a sense of humour, have been overlooked in HCI because they have been considered too subjective and hence hardly applicable to a machine.

The use of humour in marketing has been shown to positively impact desired outcomes, such as increased recall of ads (Cline & Kellaris, 2007) and strengthened relationships with business partners (Bompar et al., 2018). This is particularly important for the marketing school focusing on relationships, networks, and interactions (Gummesson, 2017). By incorporating humour into chatbot interactions, businesses may be able to create a more engaging and memorable customer experience. Further research is necessary to fully explore the potential benefits of incorporating humour in chatbot design (Robinson et al., 2020).

To address this knowledge gap and the growing interest from practitioners, the research aims to investigate how humour in a chatbot welcome message influences customers’ emotional attachment and conversion-to-lead through the mediating role of engagement.

This study contributes to the development of chatbot design and marketing strategies by exploring the potential of humour as a tool for improving user engagement and conversion rates. By investigating the relationship between a chatbot’s use of humour and customers’ emotional attachment and conversion-to-lead, this research provides insights into the effectiveness of humour in chatbot design. The findings can help companies optimise their chatbot interactions to enhance user engagement and drive business growth.

From a theoretical perspective, this research contributes to emergent theories on the effects of anthropomorphism on consumer behaviour and the psychological mechanisms that underlie this relationship. Our research shows that positioning chatbots as anthropomorphic can motivate customers’ emotional attachment and conversion-to-lead through the mediating role of user engagement.

Moreover, our research provides valuable insight into conversational commerce from the perspective of humour usage. Chatbots use human-like cues to appear more anthropomorphic and thereby more appealing; however, little research has investigated humour as such a cue, especially in conversational commerce.

1. Literature Review and Conceptual Framework Development

1.1 Chatbots: The Background

Chatbots are sophisticated computer software that replicates human interaction in its natural form (Zumstein & Hundertmark, 2018). They are intended to resemble an interpersonal chat and have a high level of targeted customisation (Letheren & Glavas, 2017). Chatbots use NLP and sentiment analysis technology to converse with people or other chatbots through text messages or spoken words (Khanna et al., 2015). Using chatbots, consumers may ask questions and issue instructions in natural language and get the information they need in a conversational manner. Other synonyms for chatbots include digital assistants, conversational agents, and intelligent bots.

1.2 Human-Chatbot Interactions

CASA Theory and Anthropomorphism. Developing conversational chatbots with human-like communication behaviours draws on the “Computers Are Social Actors” (CASA) concept (Nass et al., 1994; Gambino et al., 2020). The CASA paradigm contends that we frequently attribute fundamental human qualities such as personality, emotions, and logical cognition to computers even when they exhibit few or no human-like characteristics (Nass & Moon, 2000). Consequently, robots are seen as social partners capable of meaningful connections. This inclination is referred to as anthropomorphism, defined as “a process of inductive inference, by which humans try to rationalise and predict machines” (Epley et al., 2007 as cited in Van Pinxteren et al., 2020, p. 206).

People commonly personify their electronics by giving them names, assigning them feelings, and imposing social norms such as politeness and reciprocity, even though the gadgets fall short of the definition of humanity (Nass et al., 1999). Interestingly, attributing human characteristics to nonhuman agents also shapes people’s views of them. For example, Holtgraves et al. (2007) found that computers that address their interlocutor by first name are seen as more competent. Additionally, Tzeng (2004) demonstrated that computers that express regret for their errors are seen as more sensitive and less robotic.

Along with the findings in anthropomorphism research, the CASA theory has aided in creating chatbots that exhibit the same range of communication behaviours people do while establishing relationships (Fink, 2012). Other examples include chatbots that provide social appreciation (Kaptein et al., 2011) and avatars that replicate nodding, head tilting, and eye gazing (Liu et al., 2012). Despite these attempts, conversational agents still do not seem able to create completely satisfying connections with their users (Everett et al., 2017).

Overview of HCI Findings. Endowing chatbots with human-like characteristics has been shown to improve communication and foster social and emotional connections (Araujo, 2018). Interaction with a human-like chatbot may eventually result in positive attitudes toward the chatbot or the brand it represents (Araujo, 2018). At the same time, however, excessive humanisation of agents can evoke unsettling feelings and raise users’ expectations (Ciechanowski et al., 2019).

While many studies on chatbot design focus on improving functional precision, such as speed or accuracy (e.g., Han et al., 2021), the literature consistently suggests that chatbots should incorporate social capabilities (e.g., Jain et al., 2018; Đuka & Njeguš, 2021). Further, Neururer et al. (2018) highlight that making a conversational agent likeable to users is essentially a social issue, not a technological one. The open problem is determining which social features are crucial for enhancing chatbots’ communication and social abilities.

1.3 Chatbots in Marketing

Following the logic of Thorbjørnsen et al. (2002), who define interactive marketing as “an iterative dialogue where individual consumers’ needs and desires are uncovered, modified and satisfied to the degree possible” (p. 19), chatbots, by their very conversational nature, should be recognised as a viable marketing tool for establishing and fostering personal relationships with customers.

Digital conversational assistants have tremendous potential to boost customer engagement, which is an essential metric in marketing as it may result in raised conversions and revenue (Kaczorowska-Spychalska, 2019). Avatars, for example, improve online shopping experiences, and both avatars and anthropomorphic chatbots can boost buying intentions (Han, 2021). In fact, anthropomorphic digital communicators may be more compelling than human spokespeople (Touré-Tillery & McGill, 2015) and boost advertising effectiveness (Choi et al., 2001).

1.4 Use of Humour in Chatbots

1.4.1 Influence of Humour in Chatbot Greeting on Engagement. Humour is at the heart of a highly sophisticated cognitive process that separates humans from other species. Few studies have examined how humour affects consumer behaviour in chatbot–human interaction. Among them, Ptaszynski et al. (2010) demonstrated that, particularly with non-task-oriented chatbots, incorporating humour into the conversation significantly improved impressions of the chatbot’s quality and customers’ attitudes toward it. Research also indicates that chatbot agents that comprehend and use human humour are rated as more pleasant, cooperative, and competent, and as providing more definitive answers and better performance, than those that do not (Sensuse et al., 2020; Medhi Thies et al., 2017). Further, Babu et al. (2006) found that when a virtual receptionist employed humour in interacting with real users, social discussions rose by up to 50%.

Consumer engagement has become one of the most significant domains in marketing in the last two decades. It denotes a psychological condition resulting from interactive and co-creative consumer encounters with a focal object in central service interactions (Brodie et al., 2011). While the proposed customer engagement definitions differ in engagement objects, e.g., consumer’s brand engagement (Hollebeek et al., 2014), website engagement (Calder et al., 2009), community engagement (Algesheimer et al., 2005), blog engagement (Hopp & Gallicano, 2016), etc., the paper follows the definition of user engagement as a “quality of user experience that describes a positive human-computer interaction” (O’Brien & Toms, 2010, p. 1094) as it investigates human–chatbot interactions explicitly.

Ge (2017) finds positive effects of humour on customer engagement in social media. Moreover, Hoad et al. (2013) described moments in which humour spiked students’ emotional engagement in learning; humour has also been shown to stimulate fun and sustain learning. Dormann and Biddle (2007) found that humour has a high entertainment potential for increasing conversations and other verbal/textual game aspects.

CASA theory implies that humans tend to respond to computers in the same way they respond to humans, meaning that people also expect their interactions with computers to be effortless and natural. If humour improves human–human connection, as already established, it should have a comparable impact on human–chatbot interaction:

H1: A humorous (vs neutral) chatbot welcome message increases user engagement.

1.4.2 Influence of Humour in Chatbot Greeting on Emotional Attachment. According to Bowlby (1980), an attachment is a target-specific emotional relationship between a person and a particular item. People create emotional attachments to objects, including brands (Kleine et al., 1995) and social chatbots (Xie & Pentina, 2022).

Morkes et al. (1999) found that users who evaluated a system that made humorous remarks had more favourable attitudes towards the system’s attributes. In addition, anthropomorphism has been found to foster social bonding with virtual agents (e.g., Qiu & Benbasat, 2009). Because humour is a distinctively human characteristic, it probably increases anthropomorphism and could therefore increase connection or emotional attachment:

H2a: A humorous (vs neutral) chatbot welcome message increases emotional attachment.

1.4.3 Influence of Humour in Chatbot Greeting on Conversion-to-lead. One metric of particular importance to modern companies in evaluating their marketing efforts is conversion-to-lead. A “lead” in marketing is a person who shows interest in a brand’s products or services and is therefore a potential customer, typically one for whom the company has some means of contact, such as a phone number, address, or email (SendPulse, 2021). Converting visitors into leads and starting them on the path to becoming customers is known as conversion-to-lead (Jotform, 2021).

Businesses have recognised chatbots as a way to convert anonymous visitors into leads at scale. Lead generation is a core step in marketing strategy, because once a company can contact a prospect, it can start building a long-term relationship and potentially convert the lead into a sale.

Edlund and Härgestam (2020) argue that if chatbots were used to their full capacity, clearer gains in cost-effectiveness, resource allocation, consumer engagement, and lead generation might be observed.

Humour can help establish rapport with service staff (Marín & Ruiz de Maya, 2013). Lunardo et al. (2018) found that constructive humour positively influences sellers’ performance. Similarly, Chiew et al. (2019) found that customers of retail services perceived interactions with frontline employees as more favourable when those employees used humour. In the same vein, incorporating humour into chatbot conversations can improve impressions of chatbots (Ptaszynski et al., 2010).

Humour has thus been linked to more favourable customer evaluations of service encounters, higher conversions, and greater advertising effectiveness. Employing the logic of CASA theory, it is therefore reasonable to expect humour to have a similarly positive effect on conversion-to-lead: when exposed to humorous stimuli, customers would form favourable evaluations of the brand and/or chatbot, which would increase their willingness to leave their contact information:

H3a: A humorous (vs neutral) chatbot welcome message increases conversion-to-lead.

1.4.4 Influence of Humour in Chatbot Greeting on Emotional Attachment via Engagement. Humour is known to elicit positive emotions, such as joy and amusement, which can create a positive emotional state in the customer. This positive emotional state can then lead to increased engagement with the chatbot and, consequently, a greater connection with it.

Positive emotions are associated with greater engagement in various contexts, including online interactions (de Oliveira Santini et al., 2020), while research also suggests that consumer engagement increases connection and emotional bonding (Brodie et al., 2013). Following this idea, it is probable that engagement caused by humour brings the user into a playful state of mind and makes them feel more connected to the chatbot. Therefore, it is reasonable to assume that a humorous greeting by a chatbot can induce positive emotions, leading to increased engagement and emotional attachment:

H2b: Humorous (vs neutral) welcome message of a chatbot increases user engagement, and in turn, engagement positively impacts customers’ emotional attachment.

1.4.5 Influence of Humour in Chatbot Greeting on Conversion-to-lead via Engagement. To the best of our knowledge, no studies have yet investigated the relationship between humour and conversion-to-lead. Ash (2015) claims that humour, when applied well, may provide an unexpected break from the standard web experience. Moreover, Stack (2020) describes customer engagement as a technique for further qualifying a lead and generating a selling opportunity. If engagement leads to such positive consumer outcomes, especially those related to purchase intention, it is reasonable to believe that engagement can increase a consumer’s interest in a brand and thus their willingness to give out contact information for future dialogue with the brand. Based on this reasoning, the following hypothesis emerges:

H3b: Humorous (vs neutral) welcome message of a chatbot increases user engagement, and in turn, engagement positively impacts conversion-to-lead.

1.5 Conceptual Framework

A relatively recent direction in the literature on chatbot anthropomorphisation is the use of conversational styles and tones of voice that exhibit human likeness (Araujo, 2018; Kull et al., 2021). Thomas et al. (2018) argue that, since conversational styles are modifiable inputs in the design of a digital assistant, further research should examine how different communication styles adopted by a chatbot influence various behavioural and relational outcomes.

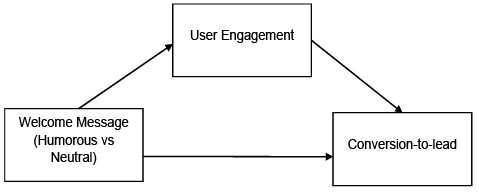

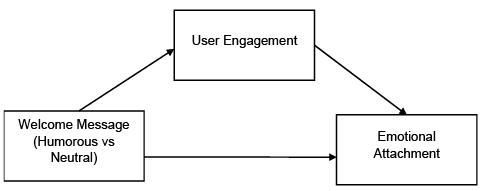

Following this line of thought, this research explores how humour in chatbots influences consumer behaviour and attitudes. Figure 1 presents the conceptual framework for the research.

Figure 1

Conceptual Framework

[Figure panels: Model 1 (conversion-to-lead); Model 2 (emotional attachment)]

2. Research Methodology

2.1 Research Method

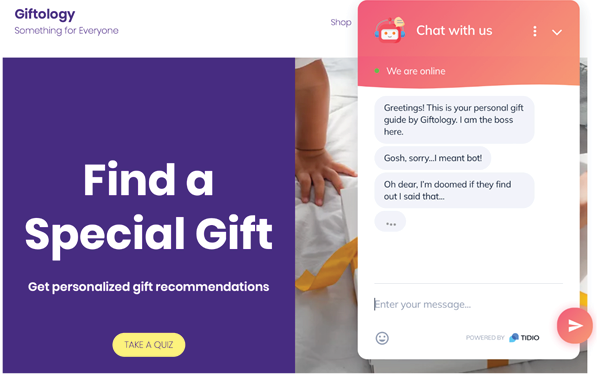

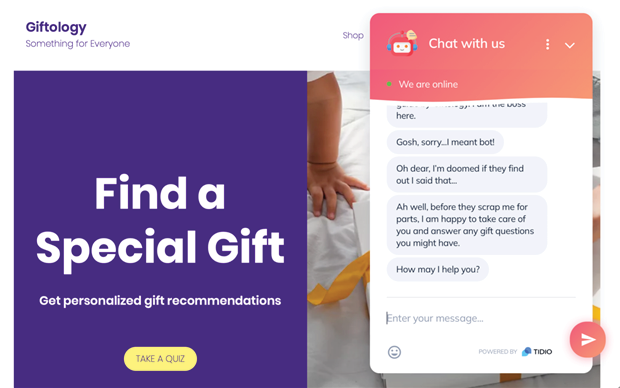

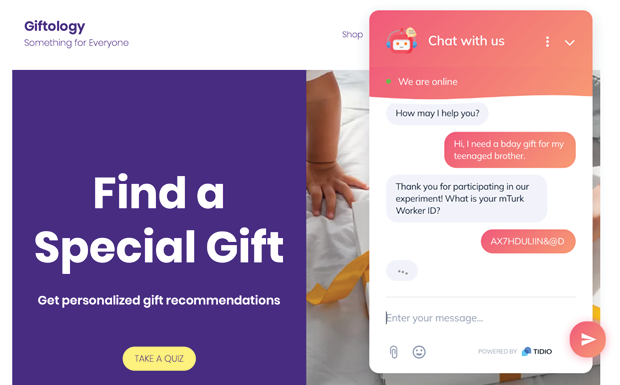

The study employed a true experimental design, implementing a functioning chatbot on an online platform with current automation technology. The chatbot was integrated into a fictional e-commerce store, and participants were randomly assigned to either the humorous or the neutral welcome-message condition.

To conduct the study, a fictional e-commerce website was created, and participants were invited to visit it via a web link. Upon arrival, participants were greeted by a virtual shopping assistant through an automatically prompted chat modal, which contained either a humorous or neutral message, depending on the participant’s group.
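The article does not say how random assignment was implemented. As a hedged illustration only, the sketch below assumes simple randomisation, where each arriving participant independently receives one of the two greetings with equal probability; the function name `assign_conditions`, the condition labels, and the seed are hypothetical.

```python
import random

CONDITIONS = ("humorous", "neutral")  # hypothetical labels for the two greetings


def assign_conditions(participant_ids, seed=2023):
    """Simple randomisation: each participant independently receives either
    greeting with probability 0.5. A fixed seed makes the allocation reproducible."""
    rng = random.Random(seed)
    return {pid: rng.choice(CONDITIONS) for pid in participant_ids}
```

For example, `assign_conditions(["P001", "P002", "P003"])` returns a dictionary mapping each ID to a condition. Simple randomisation does not guarantee equal group sizes; permuted-block randomisation would be the usual alternative when balance matters.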

After reading the instructions, participants were asked to respond to the chatbot with an open-ended message. The automated agent was custom-built using the services of Tidio, a live chat solution provider. The chatbot had an upbeat colour scheme, a friendly-looking animated robot avatar, the title “Chat with us,” and an online status bar reading “We are online”.

To mimic natural conversation, the chatbot presented messages in bite-size instalments, using a built-in Tidio feature that displays a “typing” indicator with a two-second delay between messages. Such delays are common in chat design and help create a more natural conversational flow.
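In the study this pacing came from Tidio’s built-in feature, so no custom code was involved. The sketch below only illustrates the pattern in plain Python with `asyncio`; it is not Tidio’s API, and `send_in_instalments`, the `emit` callback, and the `<typing>` event are hypothetical stand-ins for whatever a chat widget would render.

```python
import asyncio
from typing import Callable, Iterable

TYPING = "<typing>"  # hypothetical event standing in for the "typing" indicator


async def send_in_instalments(
    messages: Iterable[str],
    emit: Callable[[str], None],
    delay_s: float = 2.0,
) -> None:
    """Show a typing indicator, wait `delay_s` seconds, then deliver the next
    message, so a long greeting arrives as several short bubbles."""
    for message in messages:
        emit(TYPING)
        await asyncio.sleep(delay_s)
        emit(message)
```

Usage, with placeholder greeting text rather than the study’s stimuli: `asyncio.run(send_in_instalments(["Hi there!", "Looking for a gift?"], print))`. Using `asyncio.sleep` rather than `time.sleep` keeps a chat server free to serve other conversations during the pause.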

The humorous message used in the study was inspired by a chatbot greeting example given in an online blog post (discover.bot, 2021) and by an experimental chatbot greeting used by Kull et al. (2021). The neutral message was constructed to match it in content and length.

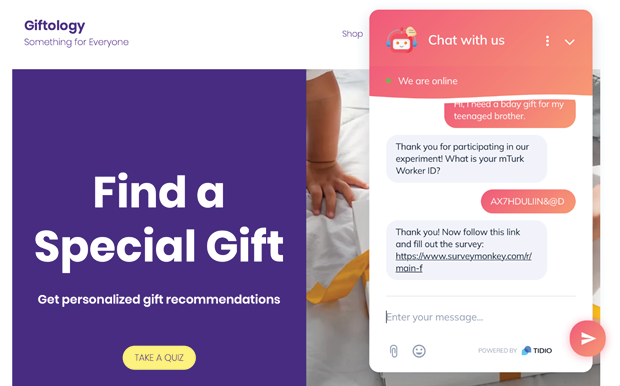

After completing the interaction with the chatbot, participants were given a link to a post-interaction questionnaire on the SurveyMonkey platform. The entire procedure was designed to last 10–15 minutes.

2.2 Sampling Method and Participants

Study participants were recruited through Amazon Mechanical Turk (MTurk). Numerous studies have evaluated the properties of MTurk samples and found excellent levels of internal consistency, inter-rater reliability, and test-retest reliability (Berinsky et al., 2012). Using the built-in filters on the MTurk platform, qualification requirements were set to a Human Intelligence Task (HIT) approval rate greater than 90% and a location in the United States. A minimum of 50 participants per experimental condition was set, following the recommendation by Brysbaert (2019).
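The authors used MTurk’s built-in filters and do not describe any API configuration. Assuming the same filters were expressed programmatically, they would correspond to two system qualifications in the `QualificationRequirements` structure of MTurk’s CreateHIT operation; the helper name `build_qualification_requirements` is hypothetical.

```python
# MTurk system qualification type IDs.
PERCENT_APPROVED_QUAL = "000000000000000000L0"  # Worker_PercentAssignmentsApproved
LOCALE_QUAL = "00000000000000000071"            # Worker_Locale


def build_qualification_requirements(min_approval_pct=90, country="US"):
    """Return requirements for: approval rate strictly above `min_approval_pct`
    and a worker locale equal to `country`."""
    return [
        {
            "QualificationTypeId": PERCENT_APPROVED_QUAL,
            "Comparator": "GreaterThan",
            "IntegerValues": [min_approval_pct],
            "ActionsGuarded": "Accept",  # workers failing the check cannot accept the HIT
        },
        {
            "QualificationTypeId": LOCALE_QUAL,
            "Comparator": "EqualTo",
            "LocaleValues": [{"Country": country}],
            "ActionsGuarded": "Accept",
        },
    ]
```

The resulting list could be passed as `QualificationRequirements=` to `create_hit` on a boto3 MTurk client; the function itself makes no network calls.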

2.3 Manipulation Check

To assess whether the chatbot welcome messages were perceived as humorous or neutral, a pre-test was conducted to check for manipulation. Two independent groups were recruited through the MTurk platform and exposed to either humorous or neutral welcome message stimuli. They were then asked to complete a short questionnaire using a 7-point Likert scale to rate the funniness of the chatbot welcome message.

The stimuli were presented in the same format as in the main experiment, with both messages displayed on the custom-designed chatbot of a fictional e-store. The funniness scale consisted of three items, namely, “The chatbot welcome message was funny,” “The chatbot welcome message was comical,” and “The chatbot welcome message was humorous.”

The manipulation check showed that the mean perceived funniness of the welcome message was significantly higher in the humorous group (M = 5.18, SD = 1.509, N = 33) than in the neutral (control) group (M = 3.59, SD = 2.148, N = 34), t(65) = 3.504, p < .001. These findings indicate that the humorous and neutral welcome messages were perceived differently, as intended.
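The reported test can be approximately re-derived from the summary statistics. The sketch assumes a pooled-variance (Student’s) independent-samples t-test, which is consistent with the reported df of 65 = 33 + 34 - 2. To stay within the standard library, the two-tailed p-value is obtained by integrating the t density with Simpson’s rule; the function names are hypothetical.

```python
import math


def pooled_t_from_stats(m1, sd1, n1, m2, sd2, n2):
    """Student's t for two independent groups from means, SDs and sizes."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df  # pooled variance
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


def t_pdf(x, df):
    """Density of Student's t distribution with `df` degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """P(|T| > |t|) = 1 - 2 * integral of the density over [0, |t|] (Simpson's rule)."""
    b = abs(t)
    h = b / steps  # `steps` must be even for Simpson's rule
    s = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        s += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1 - 2 * s * h / 3
```

With `pooled_t_from_stats(5.18, 1.509, 33, 3.59, 2.148, 34)` this gives t ≈ 3.50 with 65 df (the small gap to 3.504 is consistent with rounding in the published means and SDs), and `two_tailed_p` returns a value between .0001 and .001.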

2.4 Measurement

Measures were adapted from the following scales: user engagement from the User Engagement Scale (UES) by O’Brien et al. (2018) and emotional attachment towards the chatbot from Thomson et al. (2005). Conversion-to-lead was measured as participants’ stated intention to give out their email, asked in the survey (see Table 1). All items used a 7-point Likert scale.

Table 1

Measures

| Construct | Items | Cronbach’s alpha |
|---|---|---|
| User engagement | The conversation with the chatbot incited my curiosity. I was really drawn into the conversation with the chatbot. I felt involved in the conversation with the chatbot. | .898 |
| Emotional attachment (Connection) | I felt attached to the chatbot. I felt bonded with the chatbot. I felt connected to the chatbot. | .935 |
| Conversion-to-lead | How likely would you leave your email to the chatbot to receive relevant information about offerings in the future? | – |
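The reliability values in Table 1 are Cronbach’s alpha coefficients, α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜₒₜₐₗ), where k is the number of items, σ²ᵢ the variance of item i, and σ²ₜₒₜₐₗ the variance of respondents’ summed scores. The raw responses are not published, so the sketch below only shows how the coefficient is computed; any data passed to it would be hypothetical. Alpha does not apply to the single-item conversion-to-lead measure.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for k items; `items` holds one list of scores per item,
    with respondents in the same order in every list."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]      # each respondent's summed score
    item_var = sum(pvariance(scores) for scores in items)  # sum of item variances
    return k / (k - 1) * (1 - item_var / pvariance(totals))
```

Population and sample variances give the same alpha as long as one kind is used throughout, because the n versus n - 1 factor cancels in the ratio.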

User engagement. To measure engagement, three items representing the Reward dimension of the User Engagement Scale by O’Brien et al. (2018) were selected, for two reasons. First, we aimed to focus on the most relevant and reliable indicators of engagement for our research question, namely the impact of humour in chatbot greetings on customers’ emotional attachment and conversion-to-lead in conversational commerce. Second, these three items capture the critical aspects of intrinsic motivation and enjoyment associated with engagement, in line with the UES Reward dimension (O’Brien et al., 2018).

Emotional attachment. Instead of the full emotional attachment construct, this research measured only one of its dimensions, connection, with respect to the chatbot. Of the scales presented by Thomson et al. (2005), the connection items seemed the most appropriate given the nature of the experiment, in particular the relatively short interaction with the chatbot and the absence of any emphasis on the brand. The three chosen items measure the connection dimension of emotional attachment and, as Thomson et al. (2005) write, “describe a consumer’s feelings of being joined with the brand” (p. 80).

Conversion-to-lead. Chatbot–human interactions were explored on a simulated website that helps customers find gifts for different occasions. Asking for personal contact information on a simulated website could skew the results, because participants would likely refrain from typing in their actual email addresses, first due to privacy concerns and second because they were offered nothing of value in exchange for their input (usually a significant motivation in real situations). For this reason, the chatbot did not ask participants for their contact details; instead, the survey measured their intention to give out their email.

3. Research Results

3.1 Participants

There were 227 participants in total (109 in the neutral and 118 in the humorous group). However, a substantial number of participants were disqualified for one of the following reasons. First, some participants (2.6%) self-identified as living outside the US; because regional background may affect how one understands humour, non-US residents were disqualified. Second, a few participants (5.3%) submitted duplicate entries or multiple differing responses. Third, some (8.8%) failed the attention check placed in the survey (that is, they answered the item “To show that you are paying attention select ‘strongly agree’ as your answer” incorrectly).

Fourth, a large share of participants (35.2%) responded to the chatbot in a way that was inconsistent with the given task (i.e., stating that they were looking for a gift and seeking help).

Participants who did not satisfy these criteria were excluded from the analysis. The final dataset therefore comprised 103 participants (45.37% of the initial sample): 57 in the humorous group and 46 in the neutral group. These numbers approximately match the predetermined minimum of 50 participants per condition, set to support the validity of the results, although the neutral group fell slightly short of it.
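The four exclusion rules can be expressed as a single filter. This is a hedged sketch: the `Response` fields are hypothetical because the study’s data schema is not published, and it assumes that every entry from a worker who submitted more than once is dropped.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Response:
    # Hypothetical fields; the study's data schema is not published.
    worker_id: str
    condition: str          # "humorous" or "neutral"
    lives_in_us: bool       # self-reported residence
    attention_answer: str   # answer to the "select 'strongly agree'" item
    task_congruent: bool    # chat reply matched the gift-seeking task


def apply_exclusions(responses):
    """Keep only responses that pass all four criteria described in the text."""
    submissions = Counter(r.worker_id for r in responses)
    return [
        r for r in responses
        if r.lives_in_us
        and submissions[r.worker_id] == 1  # assumption: drop all entries of repeat workers
        and r.attention_answer == "strongly agree"
        and r.task_congruent
    ]


def group_sizes(responses):
    """Count retained responses per experimental condition."""
    return Counter(r.condition for r in responses)
```

Because a participant can fail several criteria at once, the per-criterion percentages reported for such a filter need not add up to the overall exclusion rate.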

The initial sample covered a wide range of age groups. In the humorous group, 1.7% of respondents were aged 18–20, 24.6% were 21–29, 31.4% were 30–39, 25.4% were 40–49, 11.0% were 50–59, and 5.9% were older than 60. In the neutral (control) group, 1.8% were aged 18–20, 33.9% each were aged 21–29 and 30–39, 15.6% were 40–49, 9.2% were 50–59, and 5.5% were older than 60. Ages ranged from the 18–20 bracket to the 60+ bracket.

3.2 Results

The hypotheses were tested with the PROCESS macro for SPSS (Model 4; Hayes, 2018; Preacher & Hayes, 2004), and the results are presented below. The independent variable was the condition (humour vs neutral), the mediator was self-reported engagement (Reward dimension), and the dependent variables were emotional attachment (connection) and conversion-to-lead, each tested in a separate model. Indirect effects were estimated with 5,000 bootstrap samples and 95% confidence intervals.
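PROCESS is an SPSS macro, so the following is not its code. As a hedged, standard-library Python sketch of the same idea (a simple mediation model in the sense of Hayes’s Model 4), it estimates path a by regressing the mediator M on X, paths b and c′ by regressing Y on both X and M, and forms a percentile bootstrap confidence interval for the indirect effect a × b. X is assumed to be a 0/1 condition code (neutral = 0, humorous = 1); the function names are hypothetical, and PROCESS’s exact percentile convention may differ slightly.

```python
import random
from statistics import fmean


def _cov(u, v):
    """Sample covariance (n - 1 denominator); _cov(u, u) is the sample variance."""
    mu, mv = fmean(u), fmean(v)
    return sum((p - mu) * (q - mv) for p, q in zip(u, v)) / (len(u) - 1)


def mediation_paths(x, m, y):
    """OLS paths of X -> M -> Y: a (M on X), b (M's slope in Y on X and M),
    and c_prime (X's slope in that same regression, the direct effect)."""
    vx, vm = _cov(x, x), _cov(m, m)
    cxm, cxy, cmy = _cov(x, m), _cov(x, y), _cov(m, y)
    a = cxm / vx
    det = vx * vm - cxm ** 2  # zero only if X and M are perfectly collinear
    b = (vx * cmy - cxm * cxy) / det
    c_prime = (vm * cxy - cxm * cmy) / det
    return a, b, c_prime


def bootstrap_indirect(x, m, y, n_boot=5000, seed=1):
    """Point estimate of a*b plus a 95% percentile-bootstrap interval."""
    a, b, _ = mediation_paths(x, m, y)
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        a_s, b_s, _ = mediation_paths(
            [x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]
        )
        draws.append(a_s * b_s)
    draws.sort()
    return a * b, draws[int(0.025 * n_boot)], draws[int(0.975 * n_boot) - 1]
```

Mediation is inferred when the interval excludes zero. The indirect effects reported below also follow ab = a × b: 0.74 × 0.94 ≈ 0.70 and 0.74 × 0.96 ≈ 0.71.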

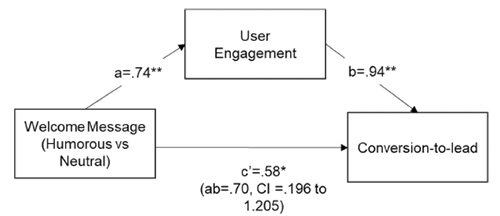

First, we tested the hypotheses of Conceptual Model 1; the empirical results are presented in Figure 2. Using humour in the welcome message increased conversion-to-lead (B=.58, standard error [SE]=.27, t(1, 101)=2.14, p<.05), supporting hypothesis H3a “A humorous (vs neutral) chatbot welcome message increases conversion-to-lead”. In addition, including a humorous welcome message in the chatbot significantly enhanced user engagement (path a: B=.74, SE=.26, t(1, 101)=2.81, p<.05), supporting hypothesis H1 “A humorous (vs neutral) chatbot welcome message increases user engagement”. User engagement, in turn, predicted conversion-to-lead (path b: B=.94, SE=.10, t(1, 101)=9.55, p<.01). To assess whether the effect of humour in the welcome message on conversion-to-lead is mediated by user engagement, we performed a mediation analysis. The indirect effect of humour in the welcome message on conversion-to-lead via user engagement was significant, as its 95% confidence interval did not include zero (path ab: B=.70, SE=.26, CI=.196 to 1.205). These findings support hypothesis H3b “Humorous (vs neutral) welcome message of a chatbot increases user engagement, and in turn, engagement positively impacts conversion-to-lead”.

Figure 2

Empirical Results of Conceptual Model 1

Note. * p<.05, ** p<.01; unstandardized coefficients are reported.

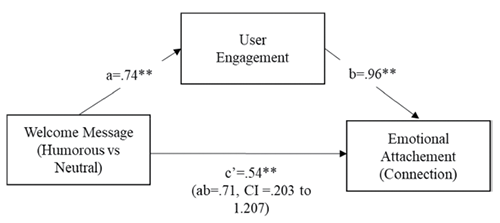

Next, we tested the hypotheses of Conceptual Model 2 (see Figure 3). We observed that using humour in the welcome message increases emotional attachment (B=.54, standard error [SE]=.20, t(1, 101)=2.70, p<.01). These results support hypothesis H2a “A humorous (vs neutral) chatbot welcome message increases emotional attachment”. We also found that using humour in the welcome message leads to higher user engagement (path a: B= .74, SE=.26, t(1, 101)=2.81, p<.01), which supports hypothesis H1. Furthermore, such engagement predicts emotional attachment (path b: B=.96, SE=.07, t(1, 101)=13.22, p<.01). To assess whether the impact of humour in the welcome message on emotional attachment is mediated by user engagement, we performed a mediation analysis. This analysis showed that the impact of using humour in the welcome message on emotional attachment is mediated by user engagement. That is, the indirect effect of using humour in the welcome message on emotional attachment via user engagement was significant, and the 95% confidence interval did not include zero (path ab: B=.71, SE=.26, CI = .203 to 1.207). These findings provided support for hypothesis H2b “Humorous (vs neutral) welcome message of a chatbot increases user engagement, and in turn, engagement positively impacts emotional attachment”.

Figure 3

Empirical Results of Conceptual Model 2

Note. * p<.05, ** p<.01; unstandardized coefficients are reported.

4. Discussion and Conclusions

4.1 Discussion

The main goal of the research was to answer the following research question: How does humour in a chatbot welcome message influence users’ emotional attachment and conversion-to-lead through the mediating role of user engagement?

From the experimental analysis, it can be concluded that humour in a chatbot welcome message (humorous vs neutral) positively influences customers’ emotional attachment and conversion-to-lead, with user engagement as a mediator. As literature on human–chatbot interaction is relatively scarce, this research drew on other fields for its hypotheses. CASA theory (Nass et al., 1994; Gambino et al., 2020), in particular, served as the bridge for transferring findings from these more distant disciplines to hypotheses about chatbot–human interaction, particularly concerning the relationships between humour and engagement and between engagement and both emotional attachment and conversion-to-lead.

Both investigated relationships showed positive indirect effects, meaning that engagement mediated each of them. Even though humour accounted for 7.3% of the variance in engagement (the mediator), a much higher proportion of the variance in the dependent variables was explained when humour and engagement were considered together: 68.4% for emotional attachment and 53.5% for conversion-to-lead. This means that, although humour predicts emotional attachment and conversion-to-lead, engagement as a mediator accounts for much of the predictive effect in the models. Regarding the direct relationships, humour accounted for 11.14% of the variance in conversion-to-lead and 13.3% of the variance in emotional attachment.

Chatbots often fail to meet users’ expectations (Gentsch, 2019; Klein et al., 2019). Humour could therefore serve as a tool for compensating for the loss of trust caused by the technical errors and inaccuracies that chatbots still often display (Adam et al., 2020).

Of the significant relationships investigated, the most robust pattern was the direct and indirect relationship between humour and emotional attachment, although it was only slightly more robust than the relationship between humour and conversion-to-lead. This result was not unexpected, as humour probably has a more direct effect on emotional attachment. However, had the experimental interaction been longer, an increase in conversion-to-lead might also be expected, since convincing users of a brand’s benefits potentially takes more time than forming an emotional impression, such as a feeling of closeness.

4.2 Theoretical Contributions

This research made several theoretical contributions. First, by expanding knowledge of the effects of humour on different consumer relational and behavioural outcomes, it contributes to the fast-growing fields of human–computer interaction and chatbot research. It presents humour as a promising factor in increasing emotional attachment and conversion-to-lead through the mediating role of engagement, indicating that humour may play a greater role in facilitating businesses’ CRM goals in the future. This has implications for CRM research because it advances our knowledge of how businesses can leverage CRM by incorporating humour in their chatbot–user communications.

Another theoretical contribution of this research concerns the use of chatbots for marketing purposes rather than solely for customer service. Because chatbot technologies have rarely been researched in conjunction with marketing, this research helps demonstrate that the conversational part of customer interaction should be considered an essential means of reaching marketing goals with automated technology. As the results indicate, investing effort in incorporating humour and increasing engagement in automated chatbot conversations with customers may increase conversion-to-lead, among other outcomes. Conversion-to-lead is one of the most critical performance metrics in marketing, as it yields customers’ contact information, which allows marketers to begin targeted and potentially personalised marketing efforts. Personalising client interactions and connections is a critical part of marketing, especially CRM. This finding therefore has implications for CRM research, since it establishes a link between CRM and automated chatbot technology, and it may pave the way for more studies in this area.

By using humour, it is possible to increase emotional attachment and conversion-to-lead both directly and indirectly through engagement. This has implications for research on user engagement, emotional attachment, and conversion-to-lead, since it demonstrates that these outcomes may be supported without human involvement in client encounters. However, this conclusion is only partial; further study is needed to examine these effects from the perspective of the customers who engage with the technologies.

4.3 Managerial Implications

As noted earlier, chatbots have been investigated more extensively in computer science and customer service, for example regarding customer perceptions of chatbot assistance with enquiries, user-friendliness, and perceived helpfulness (Chung et al., 2018; Følstad et al., 2018). Because the primary purpose of chatbots has been to help consumers with various concerns, the marketing perspective on chatbots has received less attention.

While chatbot suppliers envision chatbots fully integrated with enterprises’ CRM systems, firms often lack the competence or knowledge to achieve this. The findings of this research are, therefore, a stepping stone towards building the knowledge companies need to use their chatbots for marketing. We recommend that businesses using chatbots apply these theoretical insights in their A/B testing frameworks, test chatbot capabilities further, and integrate chatbots into their CRM systems. This could enable larger-scale marketing campaigns using chatbots, increasing the return on marketing spend while also enabling cost savings and the reallocation of resources, such as moving employees to more complex tasks.
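As a hedged sketch of how a humorous versus a neutral greeting might be compared within an A/B testing framework, the code below applies a standard pooled two-proportion z-test to the share of visitors who leave their contact details. The counts, the variant labels and the function name are hypothetical. Treating conversion as a binary event is an assumption made for illustration, not a description of how this study measured conversion-to-lead.

```python
import math


def two_proportion_ztest(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates (pooled SE).

    Returns (difference in rates, z statistic, two-sided p-value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return p_a - p_b, z, p_value


# Hypothetical counts: variant A = humorous greeting, variant B = neutral greeting.
diff, z, p = two_proportion_ztest(conv_a=130, n_a=1000, conv_b=100, n_b=1000)
print(f"lift={diff:.3f} z={z:.2f} p={p:.4f}")
```

The pooled-variance z-test is a common default for comparing two proportions in large samples; with small counts per variant, an exact test would be the safer choice.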

Another implication of this research concerns the use of humour in chatbots. While the research demonstrates that using humour in chatbots increases engagement, thereby increasing emotional attachment and conversion-to-lead, the literature points out that the use of humour is a complex issue. Research shows that there are various types of humour, which this study did not examine in depth, and that their effects on consumer behaviour vary, some being negative. We therefore recommend that businesses employing chatbots incorporate elements of humour in their communications with clients and potential leads to improve engagement and lead generation, but that they do so cautiously.

As noted previously, humour is received differently in various cultures (Jiang et al., 2019); therefore, it is critical that humour be used in a manner that makes contextual sense, is not distracting, and helps the consumer progress naturally through the sales funnel. Using humour in the welcome message shown to first-time visitors is a relatively safe choice, since the message serves as a conversation starter and does not depend on the visitor’s prior answers. However, as the literature indicates, the substance and tone of the humour should be compatible with the brand.

4.4 Limitations and Future Research

One limitation of the research is the small sample size. Although the number of respondents in each group exceeded the minimum needed, a larger sample could have yielded more precise estimates.

The length of the experiment was another limitation. To limit extraneous influences on the dependent variables, the interaction with the chatbot was kept as short as possible. However, a longer chatbot interaction could have given respondents more time and more opportunities to interact with the technology, which could have yielded more data, primarily behavioural, and thus more insight into the effects of humour in chatbot–user interactions.

Furthermore, the measurement approach used in this study has limitations. More comprehensive and validated measures of customer engagement should be considered in future research. For instance, the Hollebeek et al. (2014) scale or other recommended scales identified by Hollebeek et al. (2023) could be used to provide a more robust and validated measurement of customer engagement.

The type of shopping task could moderate the effect of humour on conversion-to-lead and emotional attachment. Future research could investigate the impact of humour in different task contexts, such as utilitarian versus hedonic tasks, to determine whether the effect of humour varies with the type of shopping task.

Further studies could explore whether humour increases the human likeness of chatbots and how this affects their acceptance and likeability. Comparisons between how people interact with chatbots and with humans in similar settings could also be explored.

It would also be interesting to investigate whether the benefits of using humour in conversations with customers transfer to chatbots that a company uses for internal communication with its employees and for employee engagement initiatives. This could provide insights into how chatbots can support employee engagement and improve communication within organisations.

Future research should consider the impact of humour in negative settings, such as insurance claims or service recovery. This could help companies develop appropriate communication strategies to enhance customer experiences and outcomes in challenging interactions. Additionally, it would be valuable to explore the potential differences in the type or style of humour that may be more effective in negative versus positive settings.

References

Adam, M., Wessel, M., & Benlian, A. (2020). AI-based chatbots in customer service and their effects on user compliance. Electronic Markets, 31(2). https://doi.org/10.1007/s12525-020-00414-7

Algesheimer, R., Dholakia, U. M., & Herrmann, A. (2005). The Social Influence of Brand Community: Evidence from European Car Clubs. Journal of Marketing, 69(3), 19–34. https://doi.org/10.1509/jmkg.69.3.19.66363

Araujo, T. (2018). Living up to the chatbot hype: The influence of anthropomorphic design cues and communicative agency framing on conversational agent and company perceptions. Computers in Human Behavior, 85, 183–189. https://doi.org/10.1016/j.chb.2018.03.051

Babu, S., Schmugge, S., Barnes, T., & Hodges, L. F. (2006). “What Would you Like to Talk About?” An Evaluation of Social Conversations with a Virtual Receptionist. In J. Gratch, M. Young, R. Aylett, D. Ballin, & P. Olivier (Eds.), Intelligent Virtual Agents IVA 2006 (pp. 169–180). Springer. https://doi.org/10.1007/11821830_14

Berinsky, A. J., Huber, G. A., & Lenz, G. S. (2012). Evaluating Online Labor Markets for Experimental Research: Amazon.com’s Mechanical Turk. Political Analysis, 20(3), 351–368. https://doi.org/10.1093/pan/mpr057

Bompar, L., Lunardo, R., & Saintives, C. (2018). The effects of humour usage by salespersons: The roles of humour type and business sector. Journal of Business & Industrial Marketing, 33(5), 599–609. https://doi.org/10.1108/jbim-07-2017-0174

Bowlby, J. (1980). Loss: Sadness and Depression. (Attachment and Loss Series, Vol. 3). New York: Basic Books. https://doi.org/10.1093/sw/26.4.355

Brodie, R. J., Hollebeek, L. D., Jurić, B., & Ilić, A. (2011). Customer Engagement: Conceptual Domain, Fundamental Propositions, and Implications for Research. Journal of Service Research, 14(3), 252–271. https://doi.org/10.1177/1094670511411703

Brodie, R. J., Ilic, A., Juric, B., & Hollebeek, L. (2013). Consumer engagement in a virtual brand community: An exploratory analysis. Journal of Business Research, 66(1), 105–114. https://doi.org/10.1016/j.jbusres.2011.07.029

Brysbaert, M. (2019). How Many Participants do we Have to Include in Properly Powered Experiments? A Tutorial of Power Analysis with Reference Tables. Journal of Cognition, 2(1), Article 16. https://doi.org/10.5334/joc.72

Calder, B. J., Malthouse, E. C., & Schaedel, U. (2009). An Experimental Study of the Relationship between Online Engagement and Advertising Effectiveness. Journal of Interactive Marketing, 23(4), 321–331. https://doi.org/10.1016/j.intmar.2009.07.002

Chiew, T. M., Mathies, C., & Patterson, P. (2019). The effect of humour usage on customer’s service experiences. Australian Journal of Management, 44(1), 109–127. https://doi.org/10.1177/0312896218775799

Choi, Y. K., Miracle, G. E., & Biocca, F. (2001). The Effects of Anthropomorphic Agents on Advertising Effectiveness and the Mediating Role of Presence. Journal of Interactive Advertising, 2(1), 19–32. https://doi.org/10.1080/15252019.2001.10722055

Chung, M.-J., Ko, E.-J., Joung, H.-R., & Kim, S.-J. (2018). Chatbot e-service and customer satisfaction regarding luxury brands. Journal of Business Research, 117, 587–595. https://doi.org/10.1016/j.jbusres.2018.10.004

Ciechanowski, L., Przegalinska, A., Magnuski, M., & Gloor, P. (2019). In the Shades of the Uncanny Valley: An Experimental Study of Human–Chatbot Interaction. Future Generation Computer Systems, 92, 539–548. https://doi.org/10.1016/j.future.2018.01.055

Cline, T. W., & Kellaris, J. J. (2007). The Influence of Humor Strength and Humor—Message Relatedness on Ad Memorability: A Dual Process Model. Journal of Advertising, 36(1), 55–67. https://doi.org/10.2753/joa0091-3367360104

de Oliveira Santini, F., Ladeira, W. J., Pinto, D., Herter, M. M., Sampaio, C. H., & Babin, B. J. (2020). Customer engagement in social media: A framework and meta-analysis. Journal of the Academy of Marketing Science, 48, 1211–1228. https://doi.org/10.1007/s11747-020-00731-5

discover.bot. (2021, July 20). Chatbot welcome message examples to help your bot greet visitors better. Bots for Businesses. https://discover.bot/bot-talk/chatbot-welcome-visitor/

Dormann, C., & Biddle, R. (2007). Humour in game‐based learning. Learning, Media and Technology, 31(4), 411–424. https://doi.org/10.1080/17439880601022023

Đuka, I., & Njeguš, A. (2021). Conversational Survey Chatbot: User Experience and Perception. In Proceedings of the International Scientific Conference Sinteza 2021. https://doi.org/10.15308/sinteza-2021-322-327

Edlund, P., & Holmner Härgestam, A. (2020). Customer Relationship Management and Automated Technologies: A Qualitative Study on Chatbots’ Capacity to Create Customer Engagement. [Master’s thesis, Umeå University]. https://urn.kb.se/resolve?urn=urn:nbn:se:umu:diva-172386

Epley, N., Waytz, A., & Cacioppo, J. T. (2007). On Seeing Human: A Three-Factor Theory of Anthropomorphism. Psychological Review, 114(4), 864–886. https://doi.org/10.1037/0033-295X.114.4.864

Everett, J., Pizarro, D., & Crockett, M. (2017, April 24). Why are we reluctant to trust robots? The Guardian. https://www.theguardian.com/science/head-quarters/2017/apr/24/why-are-we-reluctant-to-trust-robots

Fink, J. (2012). Anthropomorphism and Human Likeness in the Design of Robots and Human-Robot Interaction. In S. S. Ge, O. Khatib, J. J. Cabibihan, R. Simons, & M. A. Williams (Eds.), Social Robotics (ICSR 2012. Lecture Notes in Computer Science, vol. 7621, pp. 199–208). Springer. https://doi.org/10.1007/978-3-642-34103-8_20

Følstad, A., Nordheim, C. B., & Bjørkli, C. A. (2018, October). What Makes Users Trust a Chatbot for Customer Service? An Exploratory Interview Study. In Proceedings of the Fifth International Conference on Internet Science – INSCI 2018 (pp. 194–208). Springer.

Gambino, A., Fox, J., & Ratan, R. A. (2020). Building a Stronger CASA: Extending the Computers Are Social Actors Paradigm. Human–Machine Communication, 1, 71–85. https://search.informit.org/doi/abs/10.3316/INFORMIT.097034846749023

Ge, J. (2017). Humour in Customer Engagement on Chinese Social Media – A Rhetorical Perspective. European Journal of Tourism Research, 15, 171–174. https://doi.org/10.54055/ejtr.v15i.270

Gentsch, P. (2019). Künstliche Intelligenz für Sales, Marketing und Service. Springer Fachmedien Wiesbaden. https://doi.org/10.1007/978-3-658-25376-9

Gummesson, E. (2017). From relationship marketing to total relationship marketing and beyond. Journal of Services Marketing, 31(1), 16–19. https://doi.org/10.1108/jsm-11-2016-0398

Han, M. C. (2021). The Impact of Anthropomorphism on Consumers’ Purchase Decision in Chatbot Commerce. Journal of Internet Commerce, 20(1), 46–65. https://doi.org/10.1080/15332861.2020.1863022

Han, X., Zhou, M., Turner, M. J., & Yeh, T. (2021). Designing Effective Interview Chatbots: Automatic Chatbot Profiling and Design Suggestion Generation for Chatbot Debugging. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/3411764.3445569

Hayes, A. F. (2018). Partial, conditional, and moderated mediation: Quantification, inference, and interpretation. Communication Monographs, 85(1), 4–40. https://doi.org/10.1080/03637751.2017.1352100

Hoad, C., Deed, C., & Lugg, A. (2013). The Potential of Humor as a Trigger for Emotional Engagement in Outdoor Education. Journal of Experiential Education, 36(1), 37–50. https://doi.org/10.1177/1053825913481583

Hollebeek, L. D., Glynn, M. S., & Brodie, R. J. (2014). Consumer Brand Engagement in Social Media: Conceptualization, Scale Development and Validation. Journal of Interactive Marketing, 28(2), 149–165. https://doi.org/10.1016/j.intmar.2013.12.002

Hollebeek, L. D., Sarstedt, M., Menidjel, C., Sprott, D. E., & Urbonavicius, S. (2023). Hallmarks and potential pitfalls of customer- and consumer engagement scales: A systematic review. Psychology and Marketing, 40(6), 1074–1088. https://doi.org/10.1002/mar.21797

Holtgraves, T. M., Ross, S. J., Weywadt, C. R., & Han, T. L. (2007). Perceiving artificial social agents. Computers in Human Behavior, 23(5), 2163–2174. https://doi.org/10.1016/j.chb.2006.02.017

Hopp, T., & Gallicano, T. D. (2016). Development and test of a multidimensional scale of blog engagement. Journal of Public Relations Research, 28(3/4), 127–145. https://doi.org/10.1080/1062726X.2016.1204303

Jain, M., Kumar, P., Kota, R., & Patel, S. N. (2018). Evaluating and Informing the Design of Chatbots. In Proceedings of the 2018 Designing Interactive Systems Conference (DIS ’18) (pp. 895–906). https://doi.org/10.1145/3196709.3196735

Jiang, T., Li, H., & Hou, Y. (2019). Cultural Differences in Humor Perception, Usage, and Implications. Frontiers in Psychology, 10. https://doi.org/10.3389/fpsyg.2019.00123

Jotform. (2021, December 8). Lead Generation: Everything You Need to Know to Grow a Business. Jotform. https://www.jotform.com/lead-generation/

Kaczorowska-Spychalska, D. (2019). How chatbots influence marketing. Management, 23(1), 251–270. https://doi.org/10.2478/manment-2019-0015

Kaptein, M., Markopoulos, P., de Ruyter, B., & Aarts, E. (2011). Two acts of social intelligence: The effects of mimicry and social praise on the evaluation of an artificial agent. AI and Society, 26(3), 261–273. https://doi.org/10.1007/s00146-010-0304-4

Khanna, A., Pandey, B., Vashishta, K., Kalia, K., Pradeepkumar, B., & Das, T. (2015). A Study of Today’s A.I. through Chatbots and Rediscovery of Machine Intelligence. International Journal of U- and E-Service, Science and Technology, 8(7), 277–284. https://doi.org/10.14257/ijunesst.2015.8.7.28

Klein, R., Schulz, M., Reinkemeier, F., Dill, F., Schäfer, A., & Hoffmann, P. (2019). Chatbots im E-Commerce: Desillusion oder großes Potenzial? (D. P. Elaboratum (Ed.)). https://www.chatbot-studie.de/

Kleine, S. S., Kleine, R. E., III, & Allen, C. T. (1995). How is a Possession “Me” or “Not Me”? Characterizing Types and an Antecedent of Material Possession Attachment. Journal of Consumer Research, 22(3), 327–343. https://doi.org/10.1086/209454

Kull, A. J., Romero, M., & Monahan, L. (2021). How may I help you? Driving brand engagement through the warmth of an initial chatbot message. Journal of Business Research, 135, 840–850. https://doi.org/10.1016/j.jbusres.2021.03.005

Letheren, K., & Glavas, C. (2017). Embracing the bots: How direct to consumer advertising is about to change forever. The Conversation.

Liu, C., Ishi, C. T., Ishiguro, H., & Hagita, N. (2012, March). Generation of nodding, head tilting and eye gazing for human-robot dialogue interaction. In 2012 7th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (pp. 285–292).

Lunardo, R., Bompar, L., & Saintives, C. (2018). Humor usage by sellers and sales performance: The roles of the exploration relationship phase and types of humor. Recherche et Applications En Marketing (English Edition), 33(2), 5–23. https://doi.org/10.1177/2051570718757905

Medhi Thies, I., Menon, N., Magapu, S., Subramony, M., & O’Neill, J. (2017). How Do You Want Your Chatbot? An Exploratory Wizard-of-Oz Study with Young, Urban Indians. In Human-Computer Interaction - INTERACT 2017, 10513, (pp. 441–459). https://doi.org/10.1007/978-3-319-67744-6_28

Mordor Intelligence. (2022). Chatbot market | Growth, trends, and forecast (2022–2027). Mordorintelligence.com. https://www.mordorintelligence.com/industry-reports/chatbot-market

Morkes, J., Kernal, H. K., & Nass, C. (1999). Effects of Humor in Task-Oriented Human-Computer Interaction and Computer-Mediated Communication: A Direct Test of SRCT Theory. Human–Computer Interaction, 14(4), 395–435. https://doi.org/10.1207/s15327051hci1404_2

Nass, C., & Moon, Y. (2000). Machines and Mindlessness: Social Responses to Computers. Journal of Social Issues, 56(1), 81–103. https://doi.org/10.1111/0022-4537.00153

Nass, C., Moon, Y., & Carney, P. (1999). Are People Polite to Computers? Responses to Computer-Based Interviewing Systems. Journal of Applied Social Psychology, 29(5), 1093–1109. https://doi.org/10.1111/j.1559-1816.1999.tb00142.x

Nass, C., Steuer, J., & Tauber, E. R. (1994, April). Computers are social actors. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 72–78). https://dl.acm.org/doi/pdf/10.1145/191666.191703

Neururer, M., Schlögl, S., Brinkschulte, L., & Groth, A. (2018). Perceptions on Authenticity in Chat Bots. Multimodal Technologies and Interaction, 2(3), 60. https://doi.org/10.3390/mti2030060

O’Brien, H. L., Cairns, P., & Hall, M. (2018). A practical approach to measuring user engagement with the refined user engagement scale (UES) and new UES short form. International Journal of Human-Computer Studies, 112, 28–39. https://doi.org/10.1016/j.ijhcs.2018.01.004

O’Brien, H. L., & Toms, E. G. (2010). The development and evaluation of a survey to measure user engagement. Journal of the American Society for Information Science and Technology (JASIST), 61(1), 50–69. https://doi.org/10.1002/asi.21229

Preacher, K. J., & Hayes, A. F. (2004). SPSS and SAS procedures for estimating indirect effects in simple mediation models. Behavior Research Methods, Instruments, & Computers, 36(4), 717–731. https://doi.org/10.3758/BF03206553

Ptaszynski, M., Dybala, P., Higuchi, S., Shi, W., Rzepka, R., & Araki, K. (2010). Towards Socialized Machines: Emotions and Sense of Humour in Conversational Agents. In Web Intelligence and Intelligent Agents (pp. 173–205). https://doi.org/10.5772/8384

Qiu, L., & Benbasat, I. (2009). Evaluating Anthropomorphic Product Recommendation Agents: A Social Relationship Perspective to Designing Information Systems. Journal of Management Information Systems, 25(4), 145–182.

Robinson, S., Orsingher, C., Alkire, L., De Keyser, A., Giebelhausen, M., Papamichail, K. N., Shams, P., & Temerak, M. S. (2020). Frontline encounters of the AI kind: An evolved service encounter framework. Journal of Business Research, 116, 366–376. https://doi.org/10.1016/j.jbusres.2019.08.038

SendPulse. (2021, October 28). What is a marketing lead? - Definition and guide. Glossary. https://sendpulse.com/support/glossary/marketing-lead

Sensuse, D. I., Dhevanty, V., Rahmanasari, E., Permatasari, D., Putra, B. E., Lusa, J. S., Misbah,

Stack, M. (2020, December 14). What’s the difference between customer engagement and leads? CallRail. https://www.callrail.com/blog/customer-engagement-vs-leads/

Thomas, P., Czerwinski, M., McDuff, D., Craswell, N., & Mark, G. (2018). Style and Alignment in Information-Seeking Conversation. In Proceedings of the 2018 Conference on Human Information Interaction & Retrieval - CHIIR ’18 (pp. 42–51). https://doi.org/10.1145/3176349.3176388

Thomson, M., MacInnis, D. J., & Park, C. W. (2005). The Ties That Bind: Measuring the Strength of Consumers’ Emotional Attachments to Brands. Journal of Consumer Psychology, 15(1), 77–91. https://doi.org/10.1207/s15327663jcp1501_10

Thorbjørnsen, H., Supphellen, M., Nysveen, H., & Pedersen, P. E. (2002). Building brand relationships online: A comparison of two interactive applications. Journal of Interactive Marketing, 16(3), 17–34. https://doi.org/10.1002/dir.10034

Touré-Tillery, M., & McGill, A. (2015). Who or What to Believe: Trust and Differential Persuasiveness of Human and Anthropomorphized Messengers. Journal of Marketing, 79(4), 94–110. DOI: 10.1177/00222429211045687

Van Pinxteren, M. M. E., Pluymaekers, M., & Lemmink, J. G. A. M. (2020). Human-like communication in conversational agents: A literature review and research agenda. Journal of Service Management, 31(2), 203–225. https://doi.org/10.1108/josm-06-2019-0175

Waytz, A., Heafner, J., & Epley, N. (2014). The mind in the machine: Anthropomorphism increases trust in an autonomous vehicle. Journal of Experimental Social Psychology, 52, 113–117. https://doi.org/10.1016/j.jesp.2014.01.005

Xie, T., & Pentina, I. (2022). Attachment Theory as a Framework to Understand Relationships with Social Chatbots: A Case Study of Replika. In Proceedings of the Annual Hawaii International Conference on System Sciences. https://doi.org/10.24251/hicss.2022.258

Yuen, M. (2022, April 15). Chatbot market in 2022: Stats, trends, and companies in the growing AI chatbot industry. Insider Intelligence. https://www.insiderintelligence.com/insights/chatbot-market-stats-trends/

Zumstein, D., & Hundertmark, S. (2018). Chatbots: An Interactive Technology for Personalized Communication and Transaction. IADIS International Journal on WWW/Internet, 15(1), 96–109.

Appendix

An Example of a Chatbot Flow (Humorous Stimulus)